AI and the paperclip problem

Philosophers have speculated that an AI given a task such as creating paperclips might cause an apocalypse by learning to divert ever-increasing resources to that task, and then learning how to resist our attempts to turn it off. But this column argues that, to do this, the paperclip-making AI would need to create another AI that could acquire power both over humans and over itself, and so it would self-regulate to prevent this outcome. Humans who create AIs with the goal of acquiring power may be a greater existential threat.

Watson - What the Daily WTF?

Human Compatible: Artificial Intelligence and the Problem of Control by Stuart Russell

Jake Verry on LinkedIn: There's a significant shift towards

Artificial intelligence for international economists (by an

to invest up to $4 billion in Anthropic AI. What to know about the startup. - Vox

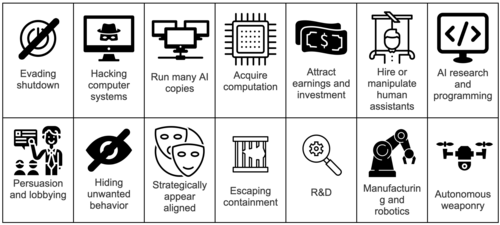

Instrumental convergence - Wikipedia

AI's Deadly Paperclips

The AI Paperclip Problem Explained, by Jeff Dutton

What is the paper clip problem when referring to artificial intelligence? - Quora

What is the paper clip problem? - Quora

Exploring the 'Paperclip Maximizer': A Test of AI Ethics with ChatGPT

AI Alignment Problem: Navigating the Intersection of Politics, Economics, and Ethics

A game about AI making paperclips is the most addictive you'll play today - The Verge

Artificial General Intelligence: can we avoid the ultimate existential threat?

What Is the Paperclip Maximizer Problem and How Does It Relate to AI?