How to Measure FLOP/s for Neural Networks Empirically? – Epoch

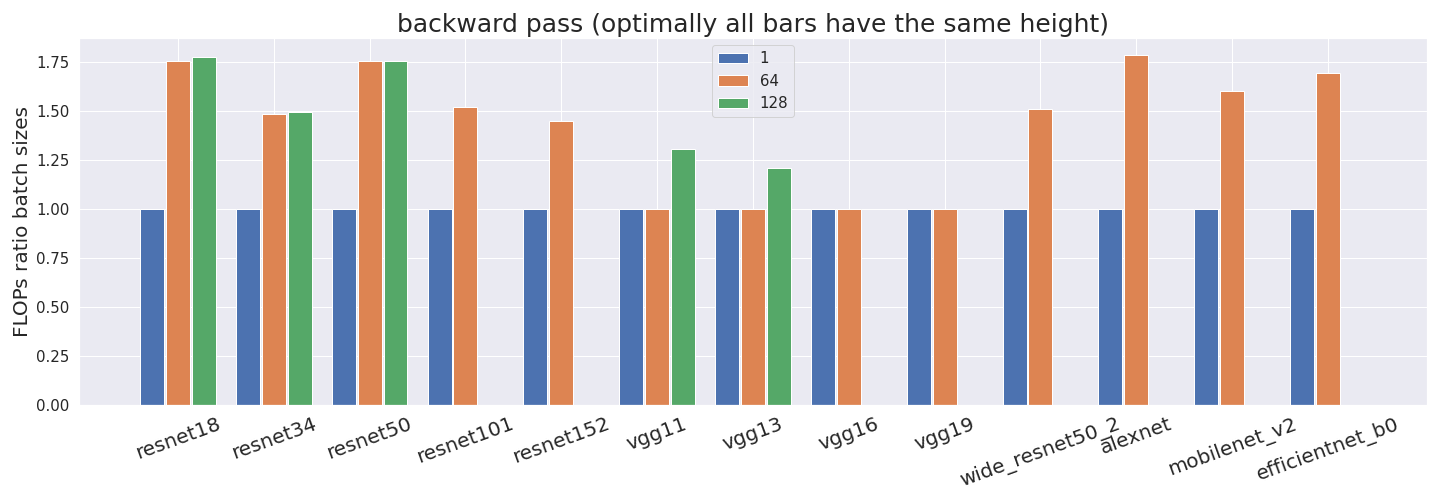

Computing the utilization rate for multiple neural network architectures.
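The utilization rate compares the FLOP/s a model actually achieves with the hardware's peak FLOP/s. The sketch below assumes the FLOP count per training step is already known, for example from counting operations analytically. The helper names `seconds_per_step` and `utilization_rate` and all numbers are hypothetical and chosen only for illustration.

```python
import time
from typing import Callable


def seconds_per_step(step_fn: Callable[[], None], n_steps: int = 10) -> float:
    """Average wall-clock seconds per call to step_fn (hypothetical helper)."""
    start = time.perf_counter()
    for _ in range(n_steps):
        step_fn()
    return (time.perf_counter() - start) / n_steps


def utilization_rate(flops_per_step: float, step_seconds: float,
                     peak_flop_per_s: float) -> float:
    """Achieved FLOP/s divided by the hardware's peak FLOP/s."""
    achieved_flop_per_s = flops_per_step / step_seconds
    return achieved_flop_per_s / peak_flop_per_s


# Hypothetical numbers: 2e12 FLOP per step, 0.5 s per step, 1e13 FLOP/s peak.
print(utilization_rate(2e12, 0.5, 1e13))  # 0.4
```

On a GPU, kernels launch asynchronously. The step function should therefore synchronize the device before returning. Otherwise the measured time is too short and the utilization is overstated.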

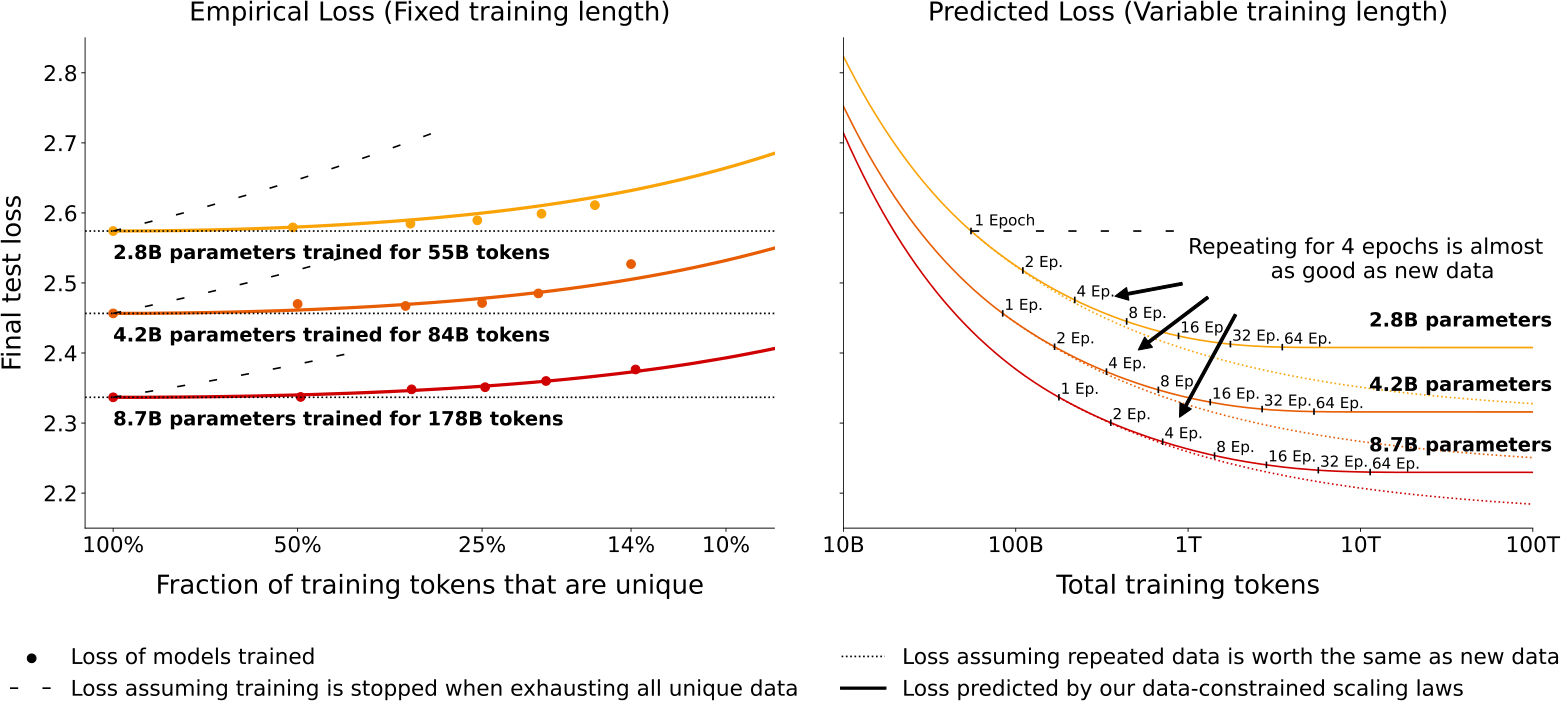

NeurIPS 2023

Assessing the effects of convolutional neural network architectural factors on model performance for remote sensing image classification: An in-depth investigation - ScienceDirect

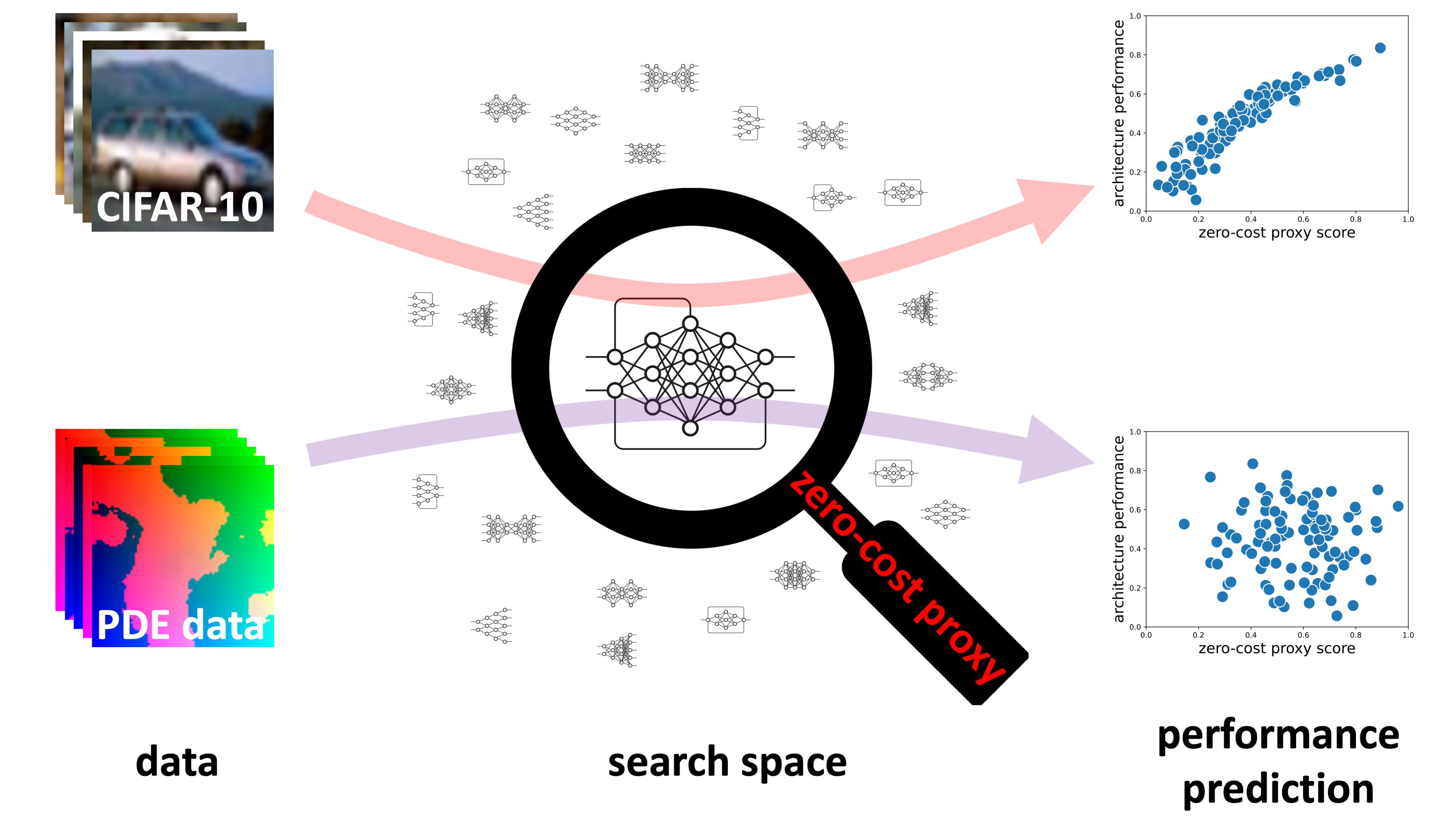

A Deeper Look at Zero-Cost Proxies for Lightweight NAS · The ICLR Blog Track

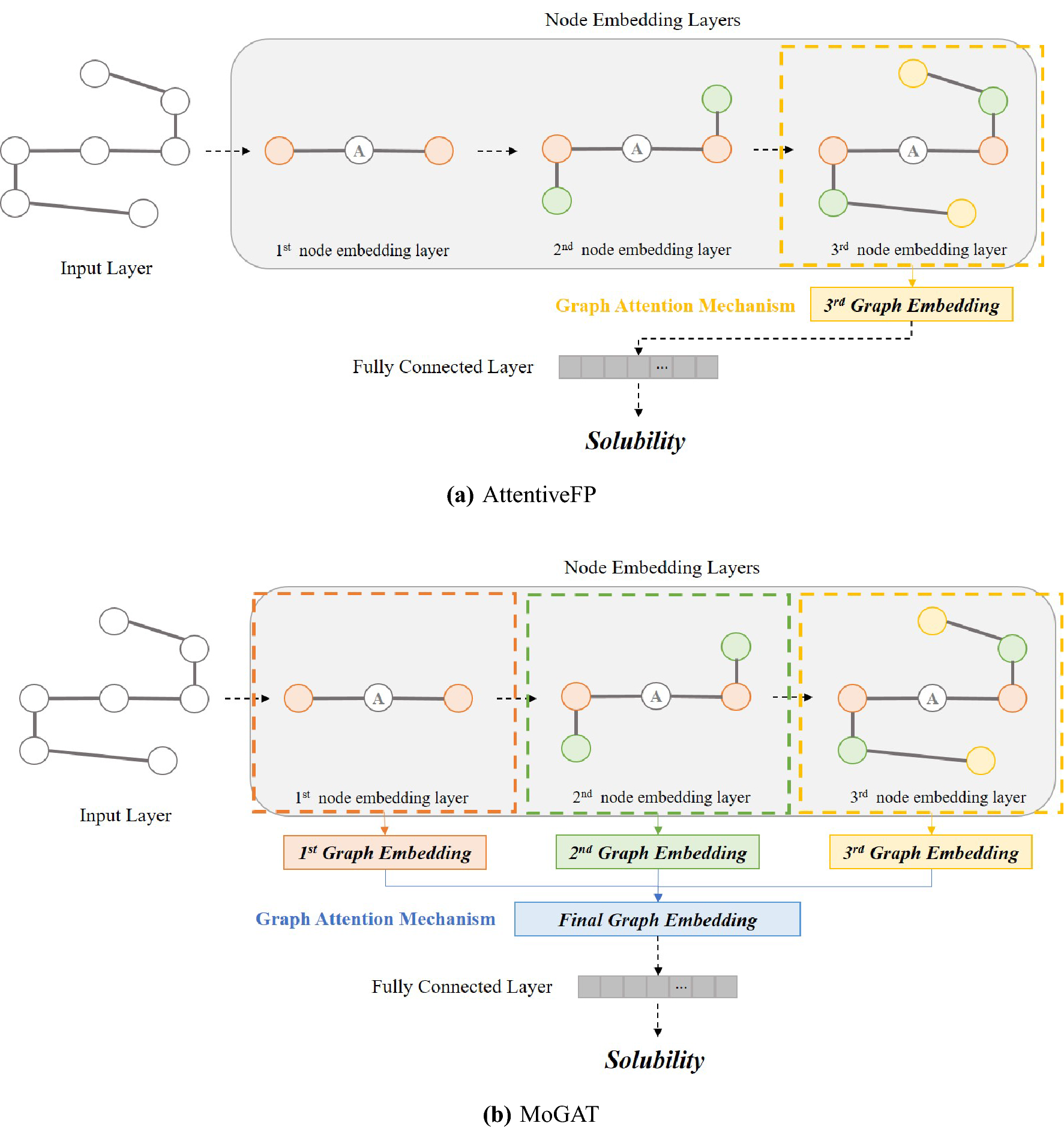

Multi-order graph attention network for water solubility prediction and interpretation

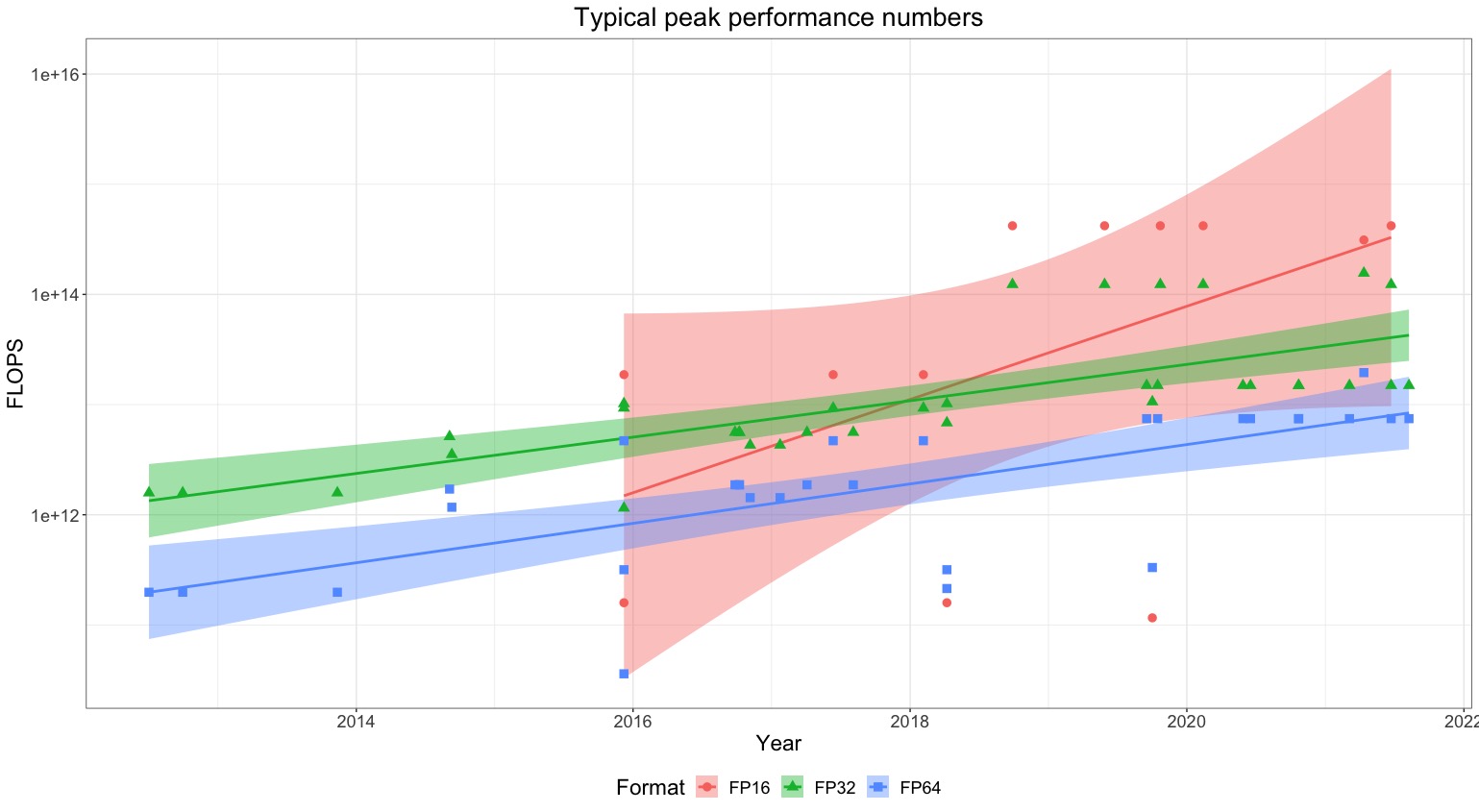


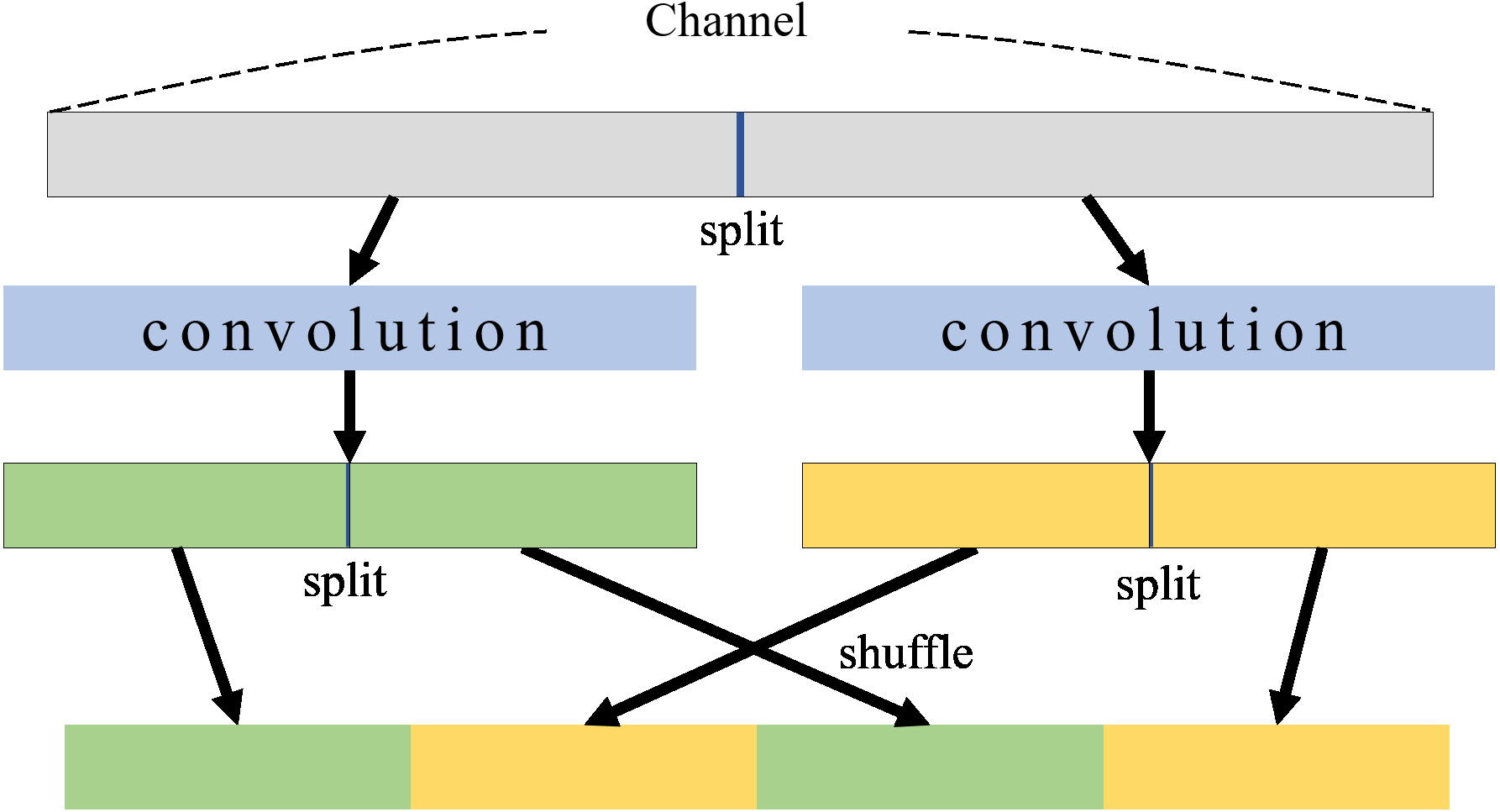

PresB-Net: parametric binarized neural network with learnable activations and shuffled grouped convolution [PeerJ]

When do Convolutional Neural Networks Stop Learning?

CoAxNN: Optimizing on-device deep learning with conditional approximate neural networks - ScienceDirect

The base learning rate for a batch size of 256 is 0.2, decayed with a poly learning-rate policy (power = 2).
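The sentence above does not spell out the schedule. The sketch below assumes the conventional "poly" definition, lr = base_lr × (1 − step / max_steps)^power, using the stated base_lr = 0.2 and power = 2. The function name `poly_lr` and the total step count `max_steps` are hypothetical.

```python
def poly_lr(step: int, max_steps: int, base_lr: float = 0.2,
            power: float = 2.0) -> float:
    """Assumed poly decay: base_lr * (1 - step / max_steps) ** power."""
    if not 0 <= step <= max_steps:
        raise ValueError("step must lie in [0, max_steps]")
    return base_lr * (1.0 - step / max_steps) ** power


# Hypothetical run of 1000 steps: the rate falls from 0.2 to 0.0.
for step in (0, 500, 1000):
    print(step, poly_lr(step, max_steps=1000))
```

With power = 2, the rate drops faster early in training than a linear decay would. At the halfway point it has already fallen to a quarter of its base value.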

8.8. Designing Convolution Network Architectures — Dive into Deep Learning 1.0.3 documentation

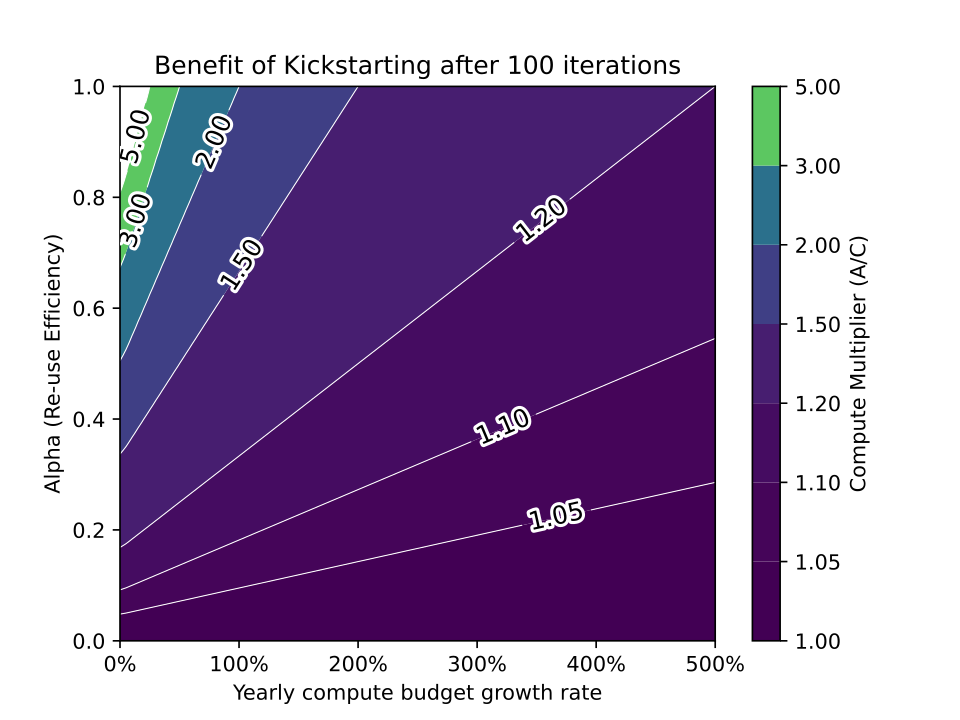

Estimating Training Compute of Deep Learning Models – Epoch