How to Fine-tune Mixtral 8x7B with Open-source Ludwig - Predibase

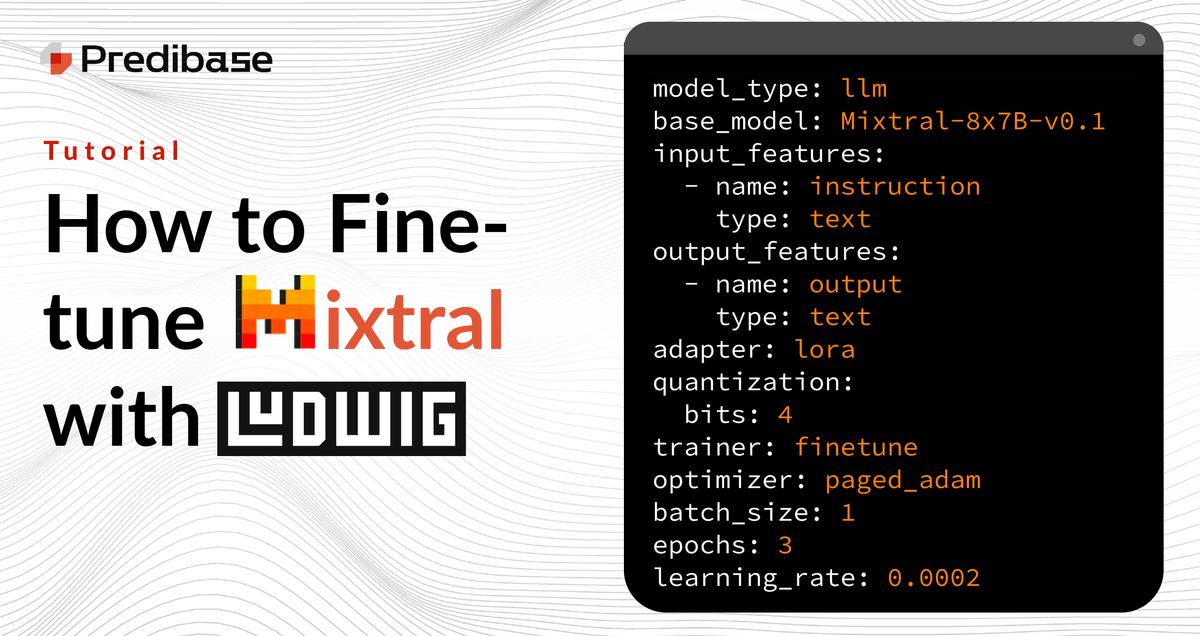

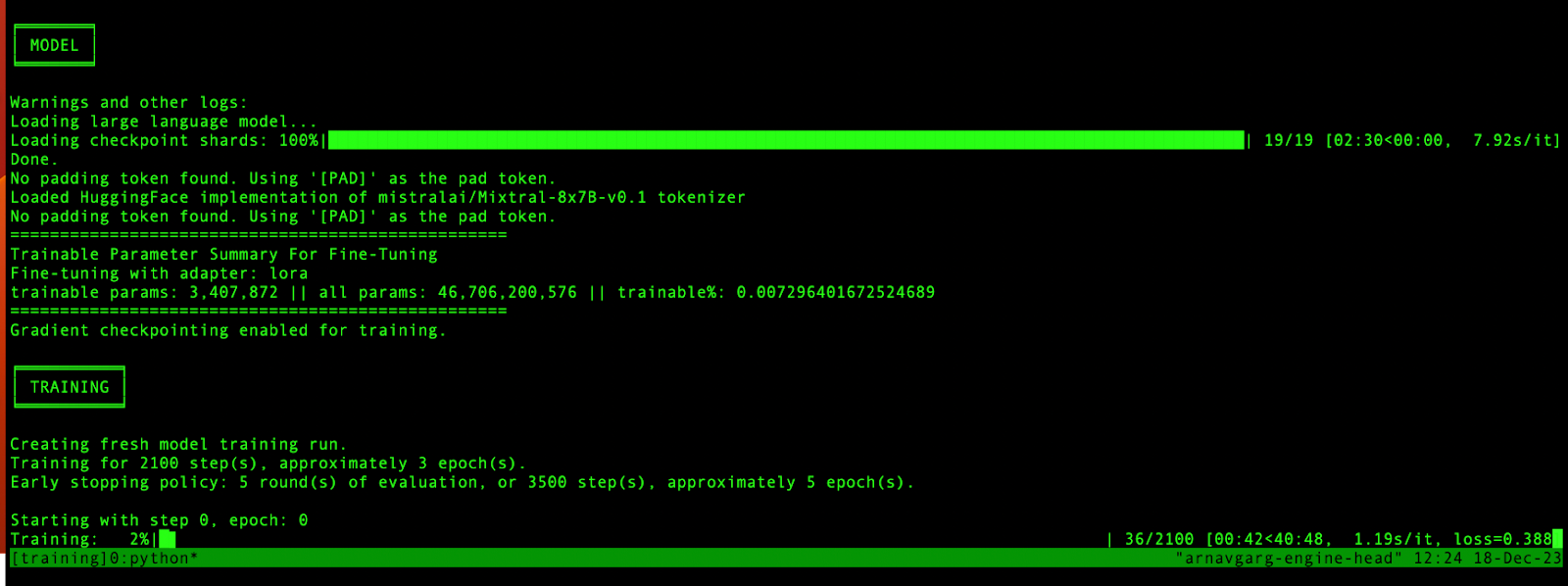

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.
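As a rough illustration of what "a few lines of code" can look like, here is a minimal sketch of a Ludwig LLM fine-tuning config built as a Python dict. It is not taken from the tutorial itself: the 4-bit quantization, the LoRA adapter, the trainer hyperparameters, the prompt template, and the `instruction` / `output` column names are all assumptions chosen for illustration, and `build_mixtral_config` and `fine_tune` are hypothetical helper names. Adjust them to your own dataset and hardware.

```python
import json


def build_mixtral_config(base_model="mistralai/Mixtral-8x7B-v0.1"):
    """Return a Ludwig LLM fine-tuning config as a plain dict.

    All values below are illustrative assumptions, not tuned settings.
    """
    return {
        "model_type": "llm",
        "base_model": base_model,
        # Assumption: 4-bit quantization plus LoRA to reduce GPU memory use.
        "quantization": {"bits": 4},
        "adapter": {"type": "lora"},
        # Assumption: the dataset has `instruction` and `output` columns.
        "prompt": {
            "template": "### Instruction: {instruction}\n\n### Response:"
        },
        "input_features": [{"name": "instruction", "type": "text"}],
        "output_features": [{"name": "output", "type": "text"}],
        "trainer": {
            "type": "finetune",
            "learning_rate": 0.0001,
            "batch_size": 1,
            "gradient_accumulation_steps": 16,
            "epochs": 3,
        },
    }


def fine_tune(dataset_path):
    """Hypothetical helper: train with Ludwig (requires `pip install ludwig`)."""
    import logging

    from ludwig.api import LudwigModel  # imported lazily; needs a GPU setup

    model = LudwigModel(
        config=build_mixtral_config(), logging_level=logging.INFO
    )
    return model.train(dataset=dataset_path)


config = build_mixtral_config()
print(json.dumps(config, indent=2))
```

Calling `fine_tune("path/to/your_dataset.csv")` would start training; the path is a placeholder. The same config can also be written as YAML and passed to the `ludwig train` command.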

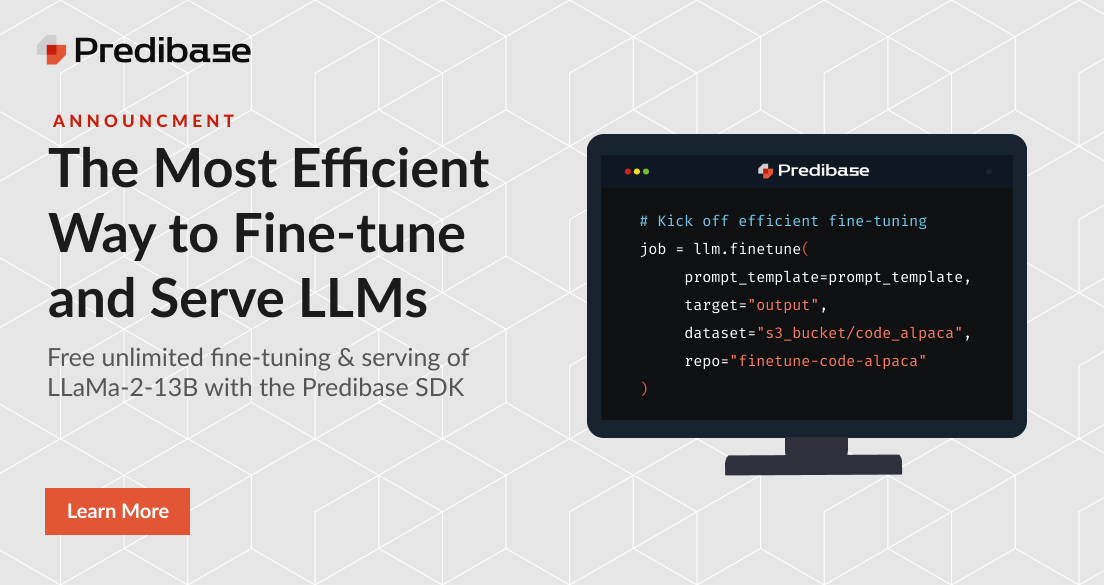

The Fastest Most Cost-Effective Way to Fine-tune and Serve Open

Deep Learning – Predibase

Fine-tune Mixtral 8x7B with best practice optimization

50x Faster Fine-Tuning in 10 Lines of YAML with Ludwig and Ray

Fine Tune mistral-7b-instruct on Predibase with Your Own Data and

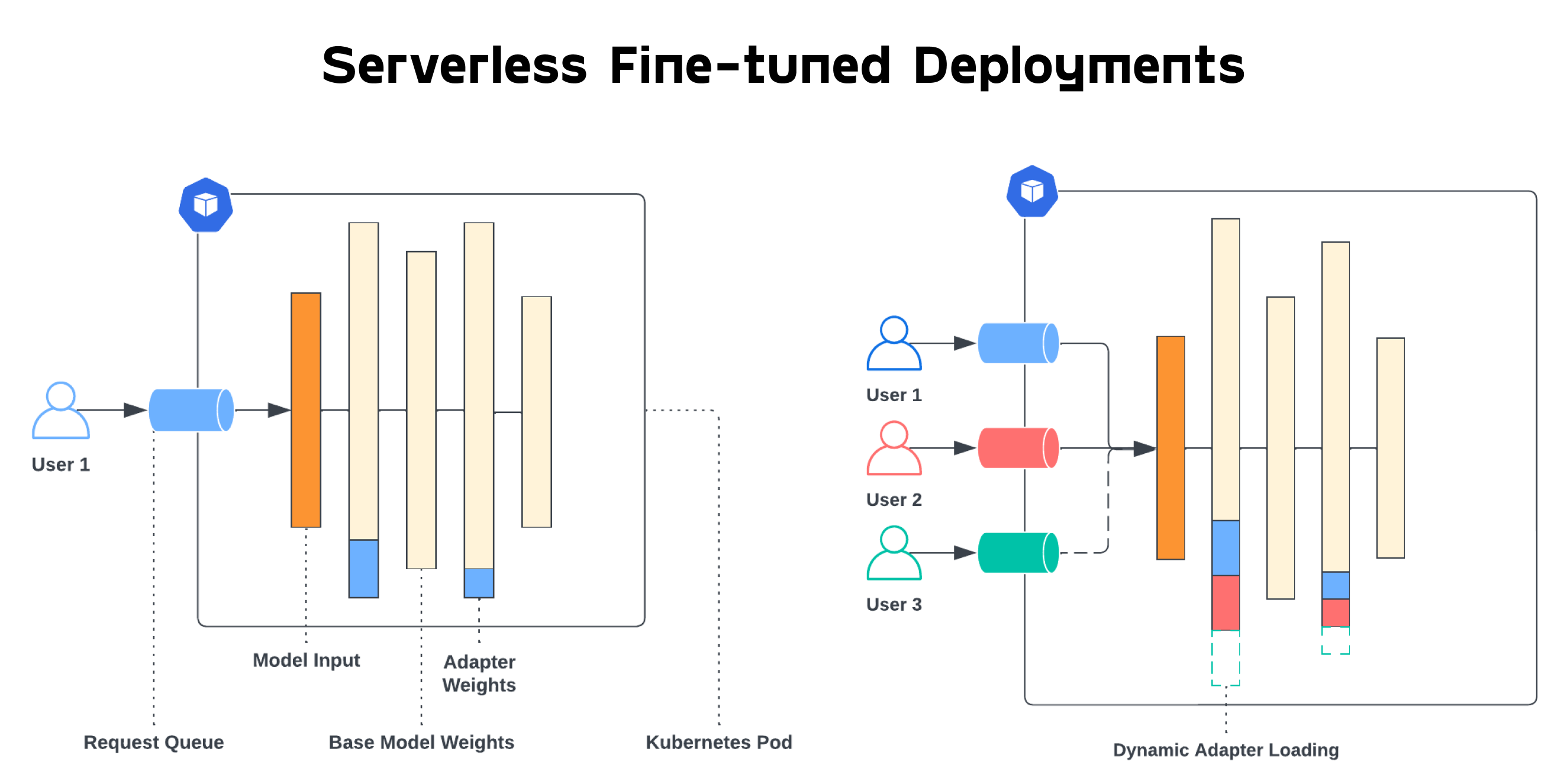

Introducing the first purely serverless solution for fine-tuned

Fine-tune Mixtral 8x7b on AWS SageMaker and Deploy to RunPod

Live Interactive Demo featuring Predibase