ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

1) The document presents a new compression-based bound for analyzing the generalization error of large deep neural networks, even when the networks are not explicitly compressed.

2) It shows that if a trained network's weight matrices and covariance matrices are nearly low-rank (their eigenvalues or singular values decay quickly), then the network has a small intrinsic dimensionality and can be efficiently compressed.

3) This yields a tighter generalization bound than existing approaches and offers insight into why overparameterized networks generalize well even though they have more parameters than training examples.
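
The link between "nearly low-rank" and "small intrinsic dimensionality" can be illustrated numerically. The sketch below is a minimal illustration, not the paper's method: it assumes synthetic matrices whose singular values decay as a power law, measures effective dimension with the degrees-of-freedom quantity Tr[Σ(Σ + λI)^{-1}] (a common proxy, not necessarily the exact definition used in the paper), and compresses a weight matrix by truncated SVD. The helper names (`degrees_of_freedom`, `truncated_svd`, `make_near_low_rank`) and all parameter values are hypothetical. It does not compute the generalization bound itself.

```python
import numpy as np


def degrees_of_freedom(cov: np.ndarray, lam: float) -> float:
    """Effective dimension N(lam) = Tr[cov (cov + lam I)^{-1}] = sum_j e_j / (e_j + lam).

    Illustrative proxy for intrinsic dimensionality (an assumption, not the paper's definition).
    """
    eigvals = np.linalg.eigvalsh(cov)        # cov is symmetric positive semidefinite
    eigvals = np.clip(eigvals, 0.0, None)    # guard against tiny negative round-off
    return float(np.sum(eigvals / (eigvals + lam)))


def truncated_svd(W: np.ndarray, rank: int):
    """Best rank-`rank` approximation of W (Eckart-Young) and the parameter count of its factors."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    W_r = (U[:, :rank] * s[:rank]) @ Vt[:rank, :]
    n_params = rank * (W.shape[0] + W.shape[1])  # store U_r * s_r and V_r separately
    return W_r, n_params


def make_near_low_rank(m: int, n: int, decay: float, rng) -> np.ndarray:
    """Hypothetical m x n matrix whose j-th singular value is j**(-decay)."""
    U, _ = np.linalg.qr(rng.standard_normal((m, m)))  # random orthogonal bases
    V, _ = np.linalg.qr(rng.standard_normal((n, n)))
    k = min(m, n)
    s = np.arange(1, k + 1, dtype=float) ** (-decay)  # power-law singular values
    return (U[:, :k] * s) @ V[:, :k].T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n = 256, 256
    W = make_near_low_rank(m, n, decay=2.0, rng=rng)
    cov = W @ W.T  # hypothetical stand-in for a layer-output covariance
    print("nominal dimension:", m)
    print("effective dimension N(1e-3):", round(degrees_of_freedom(cov, 1e-3), 2))
    for r in (4, 16, 64):
        W_r, p = truncated_svd(W, r)
        rel_err = np.linalg.norm(W - W_r, 2) / np.linalg.norm(W, 2)
        print(f"rank {r:3d}: params {p:6d} / {m * n}, relative spectral error {rel_err:.2e}")
```

With fast spectral decay, the effective dimension is far below the nominal width, and a low-rank factorization with a small fraction of the original parameters already has a small approximation error. This is the qualitative situation the summary describes.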

A gradient optimization and manifold preserving based binary neural network for point cloud - ScienceDirect

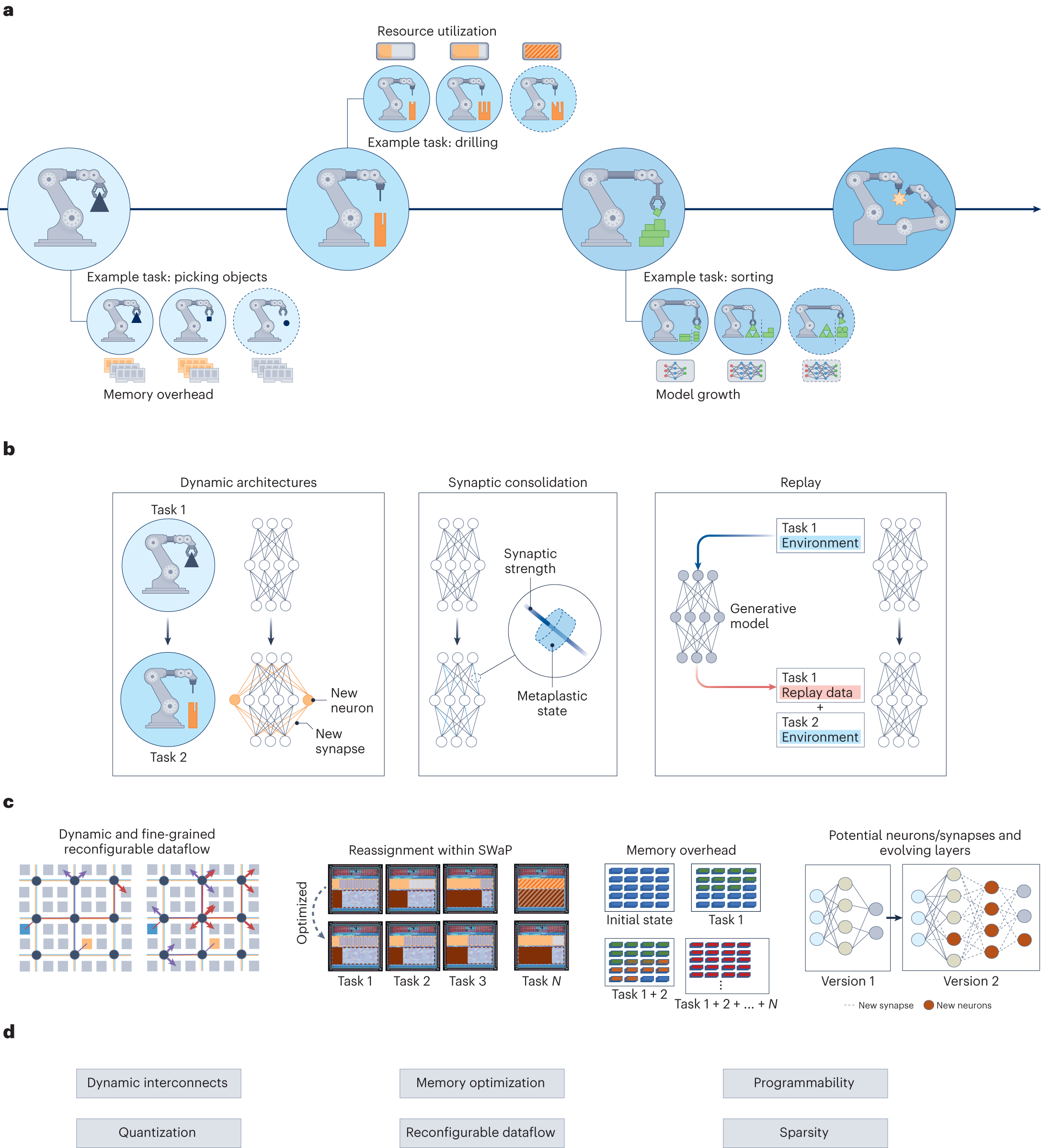

Design principles for lifelong learning AI accelerators

Higher Order Fused Regularization for Supervised Learning with Grouped Parameters

Meta Learning Low Rank Covariance Factors for Energy-Based Deterministic Uncertainty

Information Bound and Its Applications in Bayesian Neural Networks

NeurIPS 2021: Lossy Compression for Lossless Prediction

How does unlabeled data improve generalization in self-training?

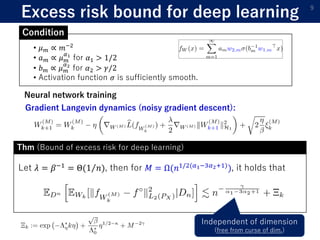

[ICLR2021 (spotlight)] Benefit of deep learning with non-convex noisy gradient descent

Neural material (de)compression – data-driven nonlinear dimensionality reduction
