DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with a WORLD_SIZE <= nGPUs/2 on the machine, results in an error "Only Tensors of floating point dtype can re
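
The dtype restriction behind that error message can be seen without any distributed setup. The sketch below is not the reproduction from the issue: the module name `WithIntParam` and its attribute names are made up for illustration, and it only shows that an integer parameter is accepted when `requires_grad=False` and rejected when gradients are requested. How DistributedDataParallel ends up triggering the same check is not shown here.

```python
import torch
import torch.nn as nn


class WithIntParam(nn.Module):
    """Hypothetical module holding one integer parameter that never needs gradients."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Integer parameters are allowed as long as requires_grad is False.
        self.index = nn.Parameter(torch.tensor([0, 1, 2]), requires_grad=False)


model = WithIntParam()
int_param_ok = model.index.dtype == torch.int64 and not model.index.requires_grad

# Asking for gradients on the same integer data raises a RuntimeError.
try:
    nn.Parameter(torch.tensor([0, 1, 2]), requires_grad=True)
    error_message = None
except RuntimeError as exc:
    error_message = str(exc)

print(int_param_ok)
print(error_message)
```

If the value does not need to be a parameter, registering it with `register_buffer` is a common alternative, though whether that suits the model in the issue is an assumption.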

Achieving FP32 Accuracy for INT8 Inference Using Quantization

RuntimeError: Only Tensors of floating point and complex dtype can

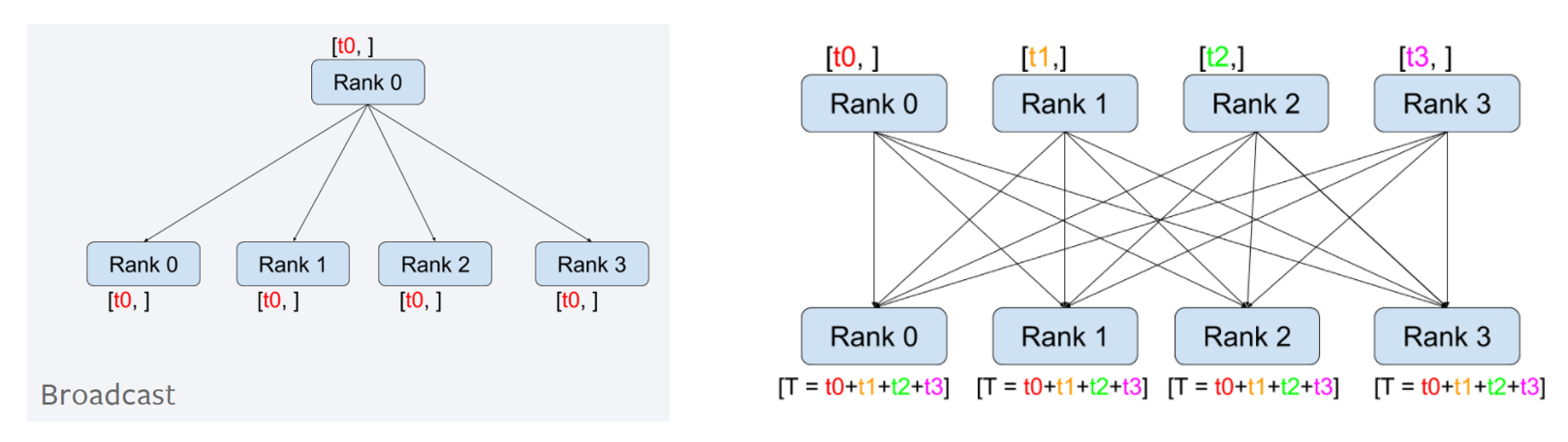

Writing Distributed Applications with PyTorch — PyTorch Tutorials

[Distributed] `Invalid scalar type` when `dist.scatter()` boolean

Don't understand why only Tensors of floating point dtype can

[DataParallel] flatten_parameters doesn't work under torch.no_grad

Pytorch - DistributedDataParallel (2) - How It Works

Question/Possible bug with ddp/DistributedDataParallel accessing a

Inplace error if DistributedDataParallel module that contains a